Topics covered

This post explores the evolution from manual code review to automated security testing, covering:

- The reality of manual code review and its limitations

- Understanding vulnerabilities vs weaknesses

- How SAST tools work under the hood

- Taint analysis and data flow tracking

- Sink-to-source vs source-to-sink methodologies

- Mitigation strategies: whitelisting vs blacklisting

- Dealing with false positives in practice

- Choosing and implementing SAST tools at scale

- The complementary relationship between manual and automated testing

It’s 3 AM. You’re on your fifth cup of coffee, eyes bloodshot, staring at line 2,847 of a 10,000-line pull request. Somewhere in this maze of curly braces and semicolons lurks a SQL injection vulnerability that could bring down your entire application. Welcome to the glamorous world of manual code review!

The Manual Code Review Saga: A Hero’s Journey

Manual code review is like being a detective in a noir film, except instead of femme fatales and smoky bars, you’re dealing with nested for-loops and callback hell. You’re hunting for that one line where a developer thought “What could possibly go wrong?” right before concatenating user input directly into a SQL query.

```python
# The developer's thought process:
# "I'll sanitize this later" (Narrator: They didn't)
query = f"SELECT * FROM users WHERE name = '{user_input}'"
```

The traditional approach is hours of caffeinated contemplation, line by line.

Manual review has its charm. You develop an intimate relationship with the codebase, understanding not just what the code does, but why that junior developer thought using eval() on user input was a stellar idea. You become fluent in reading between the lines, spotting patterns like the infamous “TODO: Add authentication” comment that’s been there since 2019, variable names like temp, temp2, and tempFinal (spoiler: it’s never final), or that one function that’s 500 lines long because “it works, don’t touch it.”

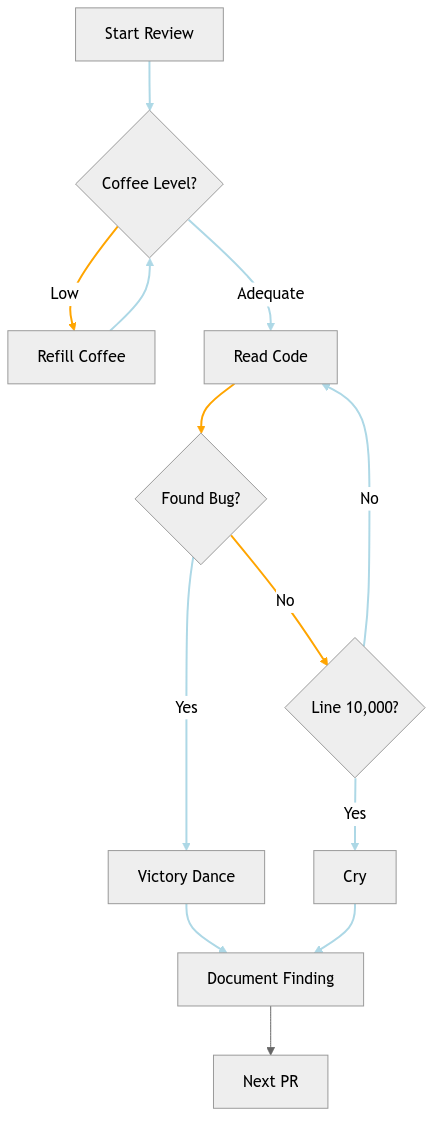

Vulnerabilities vs Weaknesses: Know Your Enemy

Before we dive deeper, let’s clear up some confusion. In the security world, we throw around terms like “vulnerability” and “weakness” as if they’re interchangeable. Spoiler alert: they’re not.

Weakness: The Potential Problem

A weakness is like leaving your window unlocked. It’s a flaw in your code that could be exploited, but there might be other factors preventing actual exploitation. Think of it as a bad coding practice or design flaw:

```python
import hashlib

# Weakness: Using MD5 for password hashing
password_hash = hashlib.md5(password.encode()).hexdigest()
# It's weak, outdated, but might not be immediately exploitable
```

Vulnerability: The Actual Problem

A vulnerability is when that unlocked window is on the ground floor, facing a dark alley, with a “Rob Me” sign. It’s a weakness that can actually be exploited in your specific context:

```python
# Vulnerability: SQL injection that's actually exploitable
user_id = request.args.get('id')
query = f"SELECT * FROM users WHERE id = {user_id}"  # 💣
db.execute(query)  # Direct path to exploitation
```
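To see why that snippet counts as an actual vulnerability rather than a mere weakness, here is a small sketch using Python's built-in sqlite3 module with an in-memory database. The table, the rows, and the payload 1 OR 1=1 are illustrative assumptions; the point is that the f-string version lets the input rewrite the query, while a parameterized query (the ? placeholder) binds the same input as plain data.

```python
import sqlite3

# Throwaway in-memory database with two illustrative users.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])


def lookup_vulnerable(user_id: str) -> list:
    # Same pattern as above: user input formatted straight into the SQL text.
    return conn.execute(f"SELECT * FROM users WHERE id = {user_id}").fetchall()


def lookup_parameterized(user_id: str) -> list:
    # Placeholder binding: the driver passes user_id as a value, never as SQL.
    return conn.execute("SELECT * FROM users WHERE id = ?", (user_id,)).fetchall()


payload = "1 OR 1=1"
print(lookup_vulnerable(payload))     # the OR clause matches every row
print(lookup_parameterized(payload))  # compared as one literal value, matches nothing
```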

The relationship? Every vulnerability is a weakness, but not every weakness is a vulnerability. It’s like squares and rectangles, but with more potential for disaster.

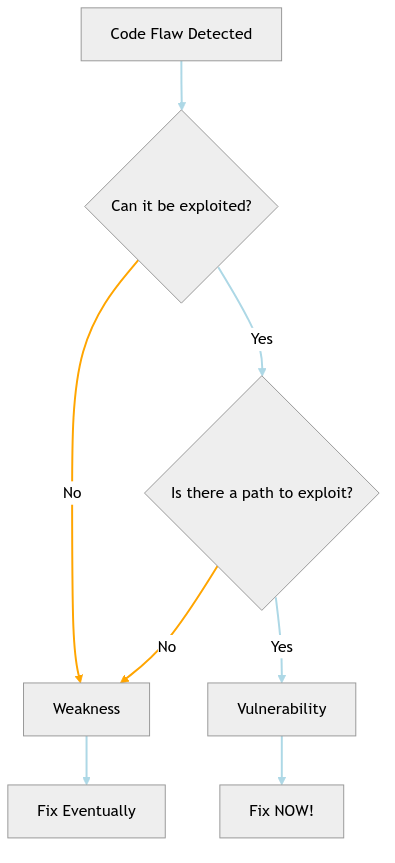

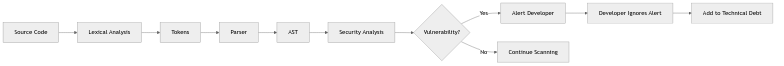

Enter SAST: When Robots Join the Hunt

Just when you thought you’d spend the rest of your career squinting at code, along comes Static Application Security Testing (SAST). Think of it as hiring a tireless robot army to do your code review. These digital detectives can scan millions of lines of code faster than you can say “SQL injection.”

SAST tools work by deconstructing your code into something computers can understand and analyze. It’s like teaching a very literal-minded robot to be paranoid about security.

The magic happens in several phases:

Phase 1: Tokenization (Breaking Bad… Code)

First, the SAST tool breaks your beautiful code into tokens. It’s like a paper shredder, but more productive:

```text
// Your code:
if(password.length < 8) { alert("Too short!"); }

// What SAST sees:
[KEYWORD:if] [PUNCT:(] [ID:password] [PUNCT:.] [ID:length]
[OP:<] [LITERAL:8] [PUNCT:)] [PUNCT:{] [ID:alert]
[PUNCT:(] [STRING:"Too short!"] [PUNCT:)] [PUNCT:;] [PUNCT:}]
```
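The tokenization step can be sketched in a few lines of Python with the standard re module. This is a toy lexer for the JavaScript fragment above, not how any particular SAST product works; the token categories and the keyword list are assumptions chosen to mirror the labels shown.

```python
import re

# Token categories mirroring the labels above. Order matters: the first
# alternative that matches at a position wins.
TOKEN_SPEC = [
    ("STRING", r'"[^"\\]*(?:\\.[^"\\]*)*"'),
    ("LITERAL", r"\d+(?:\.\d+)?"),
    ("ID", r"[A-Za-z_$][A-Za-z0-9_$]*"),
    ("OP", r"[<>=!]=?|[+\-*/]"),
    ("PUNCT", r"[(){}\[\];.,]"),
    ("SKIP", r"\s+"),
]
KEYWORDS = {"if", "else", "for", "while", "return", "function", "const", "let", "var"}
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source: str) -> list[tuple[str, str]]:
    """Split source text into (category, text) pairs, dropping whitespace."""
    tokens = []
    pos = 0
    while pos < len(source):
        match = MASTER.match(source, pos)
        if match is None:
            raise SyntaxError(f"Unexpected character {source[pos]!r} at {pos}")
        kind, text = match.lastgroup, match.group()
        pos = match.end()
        if kind == "SKIP":
            continue
        if kind == "ID" and text in KEYWORDS:
            kind = "KEYWORD"  # identifiers that are reserved words
        tokens.append((kind, text))
    return tokens


code = 'if(password.length < 8) { alert("Too short!"); }'
print(" ".join(f"[{kind}:{text}]" for kind, text in tokenize(code)))
```

Run on the fragment above, it should print the same fifteen tokens in the same order as the listing.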

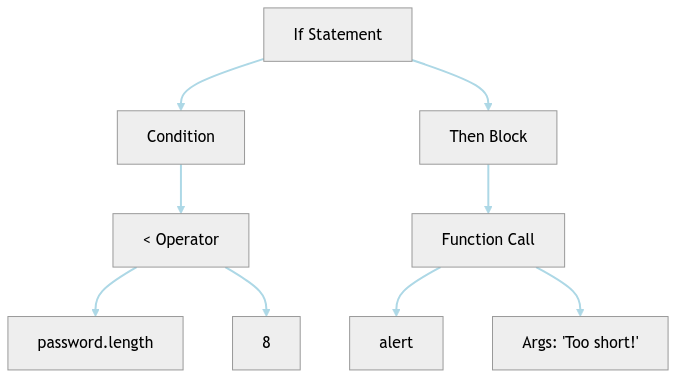

Phase 2: Building the Abstract Syntax Tree (AST)

Next, these tokens are assembled into an Abstract Syntax Tree (AST), basically a family tree for your code that shows how all the pieces relate.

This tree structure lets SAST tools understand code relationships without getting bogged down in syntax details. It’s like having X-ray vision for code structure!
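Python ships a parser for its own syntax in the standard ast module, which makes the idea easy to try. The sketch below is a hypothetical rule, not any vendor's: it flags a string literal concatenated with a variable by inspecting tree nodes, so it fires the same way whether the code sits on one line or is spread across several with extra parentheses and spaces.

```python
import ast


def find_sql_concat(source: str) -> list[tuple[int, str]]:
    """Return (line, variable) for every `"literal" + name` expression in the tree."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.BinOp)
            and isinstance(node.op, ast.Add)
            and isinstance(node.left, ast.Constant)
            and isinstance(node.left.value, str)
            and isinstance(node.right, ast.Name)
        ):
            findings.append((node.lineno, node.right.id))
    return findings


one_liner = 'query = "SELECT * FROM users WHERE id = " + user_id'
reformatted = 'query = (\n    "SELECT * FROM users WHERE id = "\n    +   user_id\n)'

# Inspect the tree itself: an Assign whose value is a BinOp(Constant, Add, Name).
print(ast.dump(ast.parse(one_liner).body[0], indent=2))
print(find_sql_concat(one_liner), find_sql_concat(reformatted))
```

The layout of the source changes the line numbers but not the shape of the tree, which is exactly why tools match on the tree rather than the text.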

The Great Methodology Showdown: Sink-to-Source vs Source-to-Sink

Here’s where things get spicy. SAST tools use two main approaches to find vulnerabilities, and they’re as different as searching for your keys by either starting at the door (where you need them) or at your pocket (where they should be).

But first, let’s understand the fundamental concepts. In security analysis, we talk about “sources” and “sinks”:

Sources are where potentially dangerous data enters your application - think user input fields, file uploads, API calls, or any external data. It’s like the front door of your house where strangers might enter.

Sinks are where this data can cause damage - database queries, system commands, HTML output, or file operations. These are the valuable things in your house that you don’t want strangers touching.

The vulnerability happens when untrusted data flows from a source to a sink without proper validation or sanitization. It’s like letting a stranger walk from your front door straight to your safe without checking who they are.

The Taint Analysis Fundamentals

Now that we understand sources and sinks, let’s see how SAST tools track the flow between them. Tainted data is any data that’s controlled by:

- Users (the creative folks who try '; DROP TABLE users; -- as their username)

- External services (APIs that might return malicious payloads)

- Files (especially those uploaded by users)

- Environment variables (sometimes compromised)

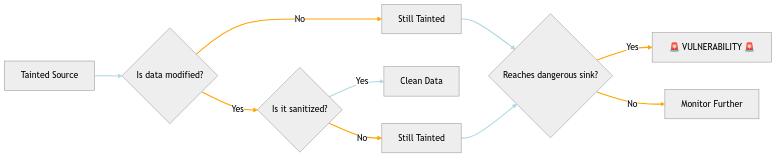

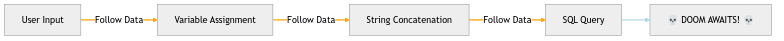

Once data is identified as tainted, SAST tools track it like a bloodhound.

The tracking process follows these rules:

- Taint Introduction: Data from untrusted sources is marked as tainted

- Taint Propagation: Any operation using tainted data produces tainted results

- Taint Checking: When tainted data reaches a sensitive operation (sink), flag it

- Taint Removal: Only proper sanitization removes the taint

```java
// Taint introduction
String userInput = request.getParameter("name"); // TAINTED!

// Taint propagation
String message = "Hello, " + userInput; // Still TAINTED!
String upper = message.toUpperCase();   // Still TAINTED!

// Taint reaches sink = vulnerability
db.execute("INSERT INTO logs VALUES ('" + upper + "')"); // 💥 BOOM!

// Proper sanitization removes taint. sanitizeSQL stands in for a sanitizer the
// tool recognizes; a PreparedStatement with bound parameters is the sturdier fix.
String safe = sanitizeSQL(userInput); // Clean!
db.execute("INSERT INTO logs VALUES ('" + safe + "')"); // Safe!
```
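The four rules can be modeled in a few lines. The Python sketch below is a runtime toy that carries a tainted flag on each value, whereas a SAST tool applies the same rules symbolically without running anything. The names (Value, source, concat, sanitize_sql_literal, sql_sink) are hypothetical, and quote doubling stands in for whatever sanitizer a real tool is configured to trust; parameterized queries remain the safer fix.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Value:
    text: str
    tainted: bool = False


class TaintViolation(Exception):
    """Raised when tainted data reaches a sink."""


def lit(text: str) -> Value:
    """A trusted literal written by the developer."""
    return Value(text)


def source(raw: str) -> Value:
    """Taint introduction: data from an untrusted source starts out tainted."""
    return Value(raw, tainted=True)


def concat(*parts: Value) -> Value:
    """Taint propagation: the result is tainted if any input is tainted."""
    return Value("".join(p.text for p in parts), any(p.tainted for p in parts))


def upper(value: Value) -> Value:
    """Taint propagation: transformations keep the taint."""
    return Value(value.text.upper(), value.tainted)


def sanitize_sql_literal(value: Value) -> Value:
    """Taint removal (hypothetical sanitizer): double single quotes, clear the flag."""
    return Value(value.text.replace("'", "''"), tainted=False)


def sql_sink(query: Value) -> str:
    """Taint checking: refuse to 'execute' a query built from tainted data."""
    if query.tainted:
        raise TaintViolation(f"tainted data reached SQL sink: {query.text!r}")
    return query.text


user_input = source("x'); DROP TABLE logs; --")
shouted = upper(concat(lit("Hello, "), user_input))
try:
    sql_sink(concat(lit("INSERT INTO logs VALUES ('"), shouted, lit("')")))
except TaintViolation as err:
    print("flagged:", err)

safe = sanitize_sql_literal(user_input)
print(sql_sink(concat(lit("INSERT INTO logs VALUES ('"), safe, lit("')"))))
```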

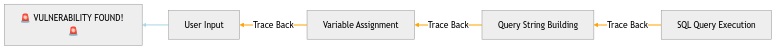

Sink-to-Source: The Backwards Detective

This approach starts at the dangerous spots (sinks) and works backwards.

Think of it as starting at the crime scene (a dangerous function like executeQuery()) and following the evidence trail back to the perpetrator (user input). This method is like a security guard who only checks people leaving the building with suspicious packages: very focused, very effective, but might miss the guy who entered with a crowbar.

Real-world example:

```java
// Listed in the order SAST visits it: backwards from the sink.

// Step 4: The crime scene (sink)
db.executeQuery(query); // 🚨 SAST starts here

// Step 3: The accomplice
String query = "SELECT * FROM users WHERE id = " + userId;

// Step 2: The handoff
String userId = request.getParameter("id");

// Step 1: The source of all evil
// User sends: ?id=1; DROP TABLE users; --
```
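A backward search like this can be sketched over Python's ast module. The sketch assumes hypothetical source and sink names (get_param and execute_query), only handles straight-line top-level code, and keeps the last assignment to each variable (a flow-insensitive shortcut); a real tool works on a full data-flow graph. It starts from each sink argument and walks definitions backwards until it reaches a source.

```python
import ast

SOURCES = {"get_param"}      # hypothetical untrusted-input functions
SINKS = {"execute_query"}    # hypothetical dangerous functions


def _names(node: ast.AST) -> set[str]:
    return {n.id for n in ast.walk(node) if isinstance(n, ast.Name)}


def _calls(node: ast.AST) -> set[str]:
    return {n.func.id for n in ast.walk(node)
            if isinstance(n, ast.Call) and isinstance(n.func, ast.Name)}


def sink_to_source(code: str) -> list[list[str]]:
    """Return one trail per sink argument that leads back to a source."""
    defs: dict[str, ast.expr] = {}              # variable -> last expression assigned
    sink_args: list[tuple[str, ast.expr]] = []
    for stmt in ast.parse(code).body:
        if (isinstance(stmt, ast.Assign) and len(stmt.targets) == 1
                and isinstance(stmt.targets[0], ast.Name)):
            defs[stmt.targets[0].id] = stmt.value
        elif (isinstance(stmt, ast.Expr) and isinstance(stmt.value, ast.Call)
              and isinstance(stmt.value.func, ast.Name)
              and stmt.value.func.id in SINKS):
            sink_args.extend((stmt.value.func.id, arg) for arg in stmt.value.args)

    paths: list[list[str]] = []

    def walk_back(expr: ast.expr, path: list[str], seen: frozenset) -> None:
        hit = _calls(expr) & SOURCES
        if hit:                                 # reached a source: record the trail
            paths.append(path + sorted(hit))
            return
        for name in sorted(_names(expr)):       # otherwise follow each variable back
            if name in defs and name not in seen:
                walk_back(defs[name], path + [name], seen | {name})

    for sink_name, arg in sink_args:
        walk_back(arg, [sink_name], frozenset())
    return paths


example = '''
user_id = get_param("id")
query = "SELECT * FROM users WHERE id = " + user_id
execute_query(query)
'''
print(sink_to_source(example))
```

For the example, the trail runs execute_query, query, user_id, get_param: the same crime-scene-to-perpetrator order as the Java listing.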

When to use sink-to-source:

- Security audits with time constraints

- Focusing on critical vulnerabilities only

- When you know which sinks are most dangerous in your app

- Compliance checks for specific vulnerability types

Source-to-Sink: The Forward Thinker

This approach follows data from entry points through the application.

It’s like following a suspicious character from the moment they enter your application to see if they eventually do something naughty. It’s more comprehensive but also more prone to false alarms, like following everyone who enters a bank because they might rob it.

When to use source-to-sink:

- Development phase (catch everything early)

- When you need comprehensive coverage

- For understanding all data flows in your application

- When building security test cases
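A forward pass can be sketched over Python's ast module under a few stated assumptions: hypothetical get_param as the source, execute_query and render_html as sinks, escape as a sanitizer, and straight-line top-level code only. It marks every variable derived from a source, clears the mark when a value passes through the sanitizer, and reports each sink call that receives a marked variable. Because it tracks every tainted value whether or not it ever reaches a sink, it covers more ground than a backward search and does more work.

```python
import ast

SOURCES = {"get_param"}                      # hypothetical entry points
SINKS = {"execute_query", "render_html"}     # hypothetical dangerous calls
SANITIZERS = {"escape"}                      # hypothetical cleaning function


def _names(node: ast.AST) -> set[str]:
    return {n.id for n in ast.walk(node) if isinstance(n, ast.Name)}


def _calls(node: ast.AST) -> set[str]:
    return {n.func.id for n in ast.walk(node)
            if isinstance(n, ast.Call) and isinstance(n.func, ast.Name)}


def source_to_sink(code: str) -> list[tuple[int, str, list[str]]]:
    """Walk statements in order, propagating taint forward from every source."""
    tainted: set[str] = set()
    findings = []
    for stmt in ast.parse(code).body:
        if isinstance(stmt, ast.Assign):
            value = stmt.value
            if (isinstance(value, ast.Call) and isinstance(value.func, ast.Name)
                    and value.func.id in SANITIZERS):
                is_tainted = False                  # taint removal
            else:                                   # introduction or propagation
                is_tainted = bool(_calls(value) & SOURCES or _names(value) & tainted)
            for target in stmt.targets:
                if isinstance(target, ast.Name):
                    if is_tainted:
                        tainted.add(target.id)
                    else:
                        tainted.discard(target.id)
        elif (isinstance(stmt, ast.Expr) and isinstance(stmt.value, ast.Call)
              and isinstance(stmt.value.func, ast.Name)
              and stmt.value.func.id in SINKS):
            hits = set().union(*(_names(a) for a in stmt.value.args)) & tainted
            if hits:                                # taint checking at the sink
                findings.append((stmt.lineno, stmt.value.func.id, sorted(hits)))
    return findings


example = (
    'name = get_param("name")\n'
    'greeting = "Hello, " + name\n'
    'safe = escape(name)\n'
    'render_html(safe)\n'
    'render_html(greeting)\n'
    'user_id = get_param("id")\n'
    'execute_query("SELECT * FROM users WHERE id = " + user_id)\n'
)
print(source_to_sink(example))
```

Here the escaped value passes, while the unescaped greeting (line 5) and the concatenated query (line 7) are reported.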

Sources and Sinks: The Usual Suspects

In the SAST world, we categorize code elements into sources (where evil enters) and sinks (where evil does damage).

Sources (The Entry Points of Doom)

The usual suspects include request.getParameter() for user input (the root of all evil), System.getenv() for environment variables (sometimes evil), new FileReader() for files (potentially evil), database reads for data from others (probably evil), and API responses for external data (definitely suspicious).

Sinks (The Danger Zones)

The danger zones are where bad things happen: executeQuery() becomes SQL injection paradise, eval() is the “please hack me” function, innerHTML opens up XSS wonderland, system() creates command injection central, and new FileWriter() hosts the path traversal party.

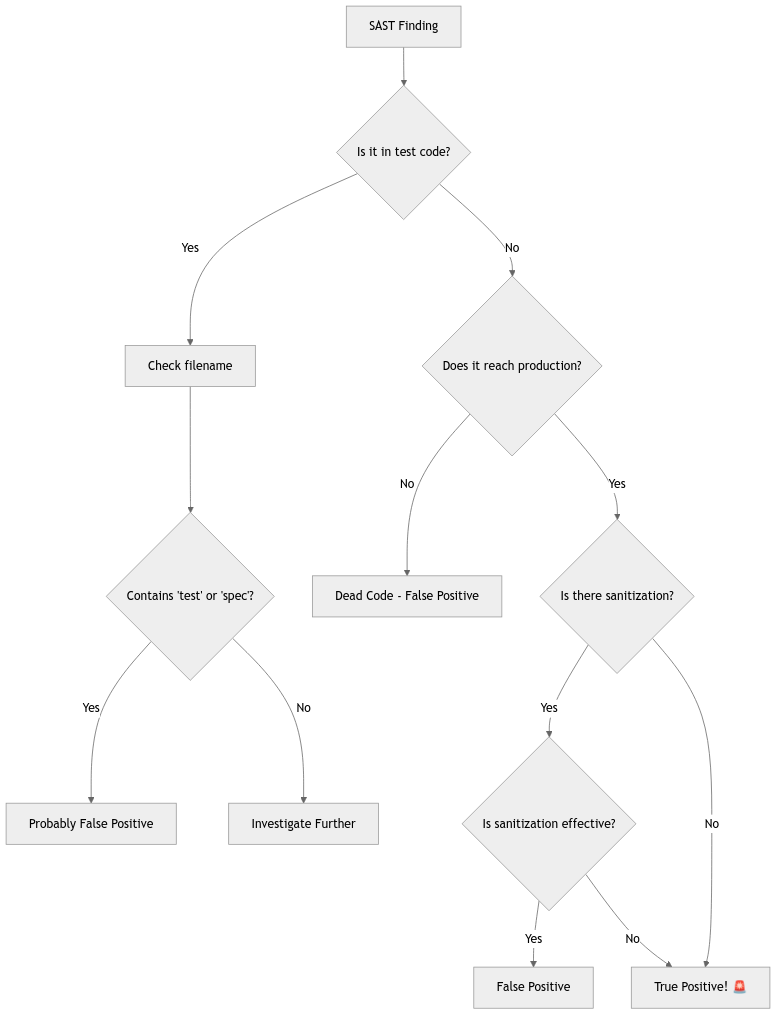

The False Positive Fiasco: When SAST Cries Wolf

Here’s the dirty secret about SAST tools: they’re paranoid. Like, “the-government-is-watching-me-through-my-toaster” level paranoid. This leads to the infamous false positive problem.

Common False Positive Patterns

- The Framework Protection Blindness

```python
# SAST says: "XSS vulnerability!"
return render_template('user.html', username=user_input)
# Reality: Flask auto-escapes this. You're fine.
```

- The Test Code Panic

```javascript
// SAST says: "HARDCODED CREDENTIALS! DEFCON 1!"
const TEST_API_KEY = "definitely-not-production-key";
// Reality: It's in test_utils.js. Chill out, robot.
```

- The Dead Code Alert

```javascript
if (false) {
  // SAST says: "SQL injection!"
  db.query("SELECT * FROM users WHERE id = " + userInput);
}
// Reality: This code never runs. It's deader than disco.
```
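The three patterns above are common enough that teams often encode them as triage heuristics. A minimal sketch, assuming a hypothetical Finding record whose reachable and autoescaped_template flags the tool (or a reviewer) has already filled in; the test-path conventions are assumptions too. It only labels findings as likely false positives, and a human still confirms them.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase

# Hypothetical conventions for where test code lives.
TEST_PATH_PATTERNS = ("*/test/*", "*/tests/*", "*test_*")


@dataclass
class Finding:
    rule: str
    path: str
    reachable: bool = True              # False if the code sits behind e.g. `if (false)`
    autoescaped_template: bool = False  # True if the framework escapes this template


def triage(finding: Finding) -> str:
    """Apply the three false positive patterns in order; default to human review."""
    if finding.rule == "xss" and finding.autoescaped_template:
        return "likely false positive: framework auto-escaping"
    if finding.rule == "hardcoded-secret" and any(
        fnmatchcase(finding.path, pattern) for pattern in TEST_PATH_PATTERNS
    ):
        return "likely false positive: test fixture"
    if not finding.reachable:
        return "likely false positive: dead code"
    return "needs manual review"


print(triage(Finding("hardcoded-secret", "src/test_utils.js")))
```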

The Art of False Positive Triage

Determining whether a finding is a false positive requires the analytical skills of Sherlock Holmes combined with the patience of a saint.

Real-World Example: The Case of the Overzealous Scanner

Let me tell you about Jenkins and the CVSS 9.8 vulnerability that SAST actually found. The args4j library had this neat “feature” where arguments starting with “@” were treated as file paths. So @/etc/passwd would helpfully read your password file.

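```java
// What developers saw: Harmless argument parsing
// (options is the bean holding the @Option fields; the name is illustrative)
new CmdLineParser(options).parseArgument(userArgs);

// What attackers saw: File reading bonanza
// userArgs = {"@/etc/passwd", "@/secret/keys"}
```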

A review of Jenkins’ own code alone would likely miss this, because spotting it required understanding the library’s documentation (who reads those, right?). But SAST tools that analyze third-party dependencies? They caught it.
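The mechanism is easy to reproduce outside Java. The Python function below is a hypothetical re-creation of the behavior described above, not args4j's code: any argument starting with @ is replaced by the lines of the file it names. Once the argument list is attacker-controlled, the argument parser itself becomes a file-read sink, and if anything later echoes those arguments back (an error message about an unknown option, say), the file's contents go with them.

```python
def expand_at_files(args: list[str]) -> list[str]:
    """Replace each '@path' argument with the lines of that file (hypothetical re-creation)."""
    expanded: list[str] = []
    for arg in args:
        if arg.startswith("@"):
            # The dangerous part: the path comes straight from the caller.
            with open(arg[1:], encoding="utf-8") as fh:
                expanded.extend(line.rstrip("\n") for line in fh)
        else:
            expanded.append(arg)
    return expanded
```

On a Unix machine, expand_at_files(["help", "@/etc/passwd"]) would return "help" followed by every line of /etc/passwd, which is the taint path a dependency-aware analyzer has to model.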

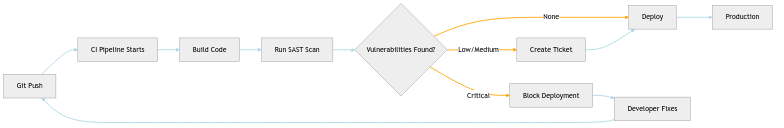

Implementing SAST Without Losing Your Mind

The CI/CD Integration Dance

Integrating SAST into your pipeline is like teaching your CI/CD to be a bouncer at a nightclub.
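The bouncer’s job boils down to a pass/fail decision on each build. Many SAST tools can export results as SARIF, the OASIS JSON format for static analysis results, so the gate can be a short script. The sketch below assumes that only results at level "error" should block a merge and that the report path arrives as the first command-line argument; both are policy choices, not standards.

```python
import json
import sys

BLOCKING_LEVELS = {"error"}  # assumption: warnings and notes don't fail the build


def blocking_results(sarif: dict) -> list[str]:
    """Collect 'ruleId at file:line' for every result at a blocking level."""
    hits = []
    for run in sarif.get("runs", []):
        for result in run.get("results", []):
            # With no level and no rule configuration, SARIF's default is "warning".
            if result.get("level", "warning") not in BLOCKING_LEVELS:
                continue
            location = (result.get("locations") or [{}])[0].get("physicalLocation", {})
            uri = location.get("artifactLocation", {}).get("uri", "?")
            line = location.get("region", {}).get("startLine", "?")
            hits.append(f"{result.get('ruleId', '?')} at {uri}:{line}")
    return hits


def main(report_path: str) -> int:
    with open(report_path, encoding="utf-8") as fh:
        hits = blocking_results(json.load(fh))
    for hit in hits:
        print("BLOCKED:", hit)
    return 1 if hits else 0  # a non-zero exit code fails the CI step


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```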

The Configuration Chronicles

Setting up SAST is like tuning a guitar. Get it wrong, and everyone suffers:

```yaml
# .sast-config.yml (illustrative; each tool uses its own key names)
exclude_paths:
  - "**/test/**"      # Don't scan tests
  - "**/vendor/**"    # Don't scan dependencies
  - "**/*.min.js"     # Don't scan minified code

custom_rules:
  - id: company-special-sauce
    pattern: |
      $X = dangerousCompanyFunction(...)
    message: "Our special function needs special handling"
    severity: WARNING

false_positive_markers:
  - "# nosec"         # Developer knows best (famous last words)
  - "// SAST-IGNORE"  # For when you're really sure
```

The Evolution: From Pattern Matching to AI Overlords

Modern SAST has evolved from simple pattern matching to sophisticated analysis:

Generation 1: The Regex Rangers

```
/system\s*\([^)]*\$_(GET|POST)/
```

“If it looks like command injection, it probably is!”
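The limits of this generation are easy to demonstrate. Running the same pattern through Python's re module against three hypothetical PHP snippets, it catches the direct case, misses the identical bug once the input passes through a variable, and still fires on a commented-out line.

```python
import re

# The Generation 1 rule from above, compiled with Python's re module.
RULE = re.compile(r"system\s*\([^)]*\$_(GET|POST)")

samples = {
    "direct": '<?php system("ping " . $_GET["host"]); ?>',
    "via variable": '<?php $h = $_GET["host"]; system("ping " . $h); ?>',
    "commented out": '<?php // system("ping " . $_GET["host"]); ?>',
}

for label, code in samples.items():
    # direct: true positive; via variable: false negative; commented out: false positive
    print(f"{label}: {bool(RULE.search(code))}")
```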

Generation 2: The Flow Followers

Taint tracking that follows data like a bloodhound:

User Input → Variable A → Function B → Variable C → exec() = 💥

Generation 3: The SAST Awakening

Machine learning that reduces false positives by learning from your triage decisions. It’s like having a SAST tool that actually listens when you say “No, that’s fine, we sanitize that!”
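Vendors don’t publish their models, but the feedback loop itself can be illustrated with something far simpler than machine learning. The sketch below is a deliberately basic stand-in: it counts past triage verdicts per rule and ranks new findings by an add-one (Laplace) smoothed estimate of how often that rule turned out to be right. The class and rule names are hypothetical.

```python
from collections import defaultdict


class TriageMemory:
    """Remembers past verdicts per rule and ranks new findings by them."""

    def __init__(self) -> None:
        self.real = defaultdict(int)
        self.noise = defaultdict(int)

    def record(self, rule: str, is_real: bool) -> None:
        if is_real:
            self.real[rule] += 1
        else:
            self.noise[rule] += 1

    def score(self, rule: str) -> float:
        """Smoothed estimate of P(real finding | rule); unseen rules score 0.5."""
        return (self.real[rule] + 1) / (self.real[rule] + self.noise[rule] + 2)

    def rank(self, rules: list[str]) -> list[str]:
        """Most-likely-real first, so reviewers see promising findings early."""
        return sorted(rules, key=self.score, reverse=True)


memory = TriageMemory()
for _ in range(8):
    memory.record("xss-in-template", is_real=False)  # "No, that's fine, we escape that!"
for verdict in (True, True, True, False):
    memory.record("sql-string-concat", is_real=verdict)
print(memory.rank(["xss-in-template", "brand-new-rule", "sql-string-concat"]))
```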

The Bottom Line: Practical SAST Strategy

After all this technical deep-diving, here’s what actually works. Start small - don’t scan your entire monolith on day one. Pick one service, tune it, learn from it. Embrace the hybrid approach by using source-to-sink for comprehensive coverage during development, then switch to sink-to-source for focused security audits, while keeping manual review for business logic and architectural decisions.

Manage your expectations because SAST won’t find everything. It’s terrible at authentication flaws (“Is this login secure?” 🤷), business logic bugs (“Should users order -1 pizzas?” 🍕), and race conditions where timing is everything. Track your metrics with simple formulas: Success Rate = True Positives / Total Findings, Developer Happiness = 1 / False Positive Rate, and Security Posture = Vulnerabilities Fixed / Time Spent.
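Plugging numbers into those formulas makes them concrete. The figures below are made up for illustration, and False Positive Rate is assumed to mean false positives divided by total findings (the share of noise), not the textbook statistical definition.

```python
def sast_metrics(true_pos: int, false_pos: int, fixed: int, hours: float) -> dict[str, float]:
    """Compute the three tongue-in-cheek metrics from triage counts."""
    total = true_pos + false_pos
    success_rate = true_pos / total if total else 0.0     # True Positives / Total Findings
    fp_rate = false_pos / total if total else 0.0          # share of findings that were noise
    happiness = 1 / fp_rate if fp_rate else float("inf")   # 1 / False Positive Rate
    posture = fixed / hours if hours else 0.0              # Vulnerabilities Fixed / Time Spent
    return {
        "success_rate": success_rate,
        "false_positive_rate": fp_rate,
        "developer_happiness": happiness,
        "fixes_per_hour": posture,
    }


# Hypothetical quarter: 200 findings triaged, 120 real, 90 fixed in 30 hours.
print(sast_metrics(true_pos=120, false_pos=80, fixed=90, hours=30))
```

With those numbers the success rate is 0.6, the false positive rate 0.4, developer happiness 2.5, and the team fixes 3 vulnerabilities per hour.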

Choosing Your SAST Weapon: A Strategic Guide to Tool Selection

Selecting a SAST tool for your organization is like choosing a car sure, a Ferrari sounds cool, but if you need to haul furniture, you probably want a truck. Let’s break down how to make a choice that won’t have you explaining to your CTO why you spent $100k on a tool that only speaks COBOL.

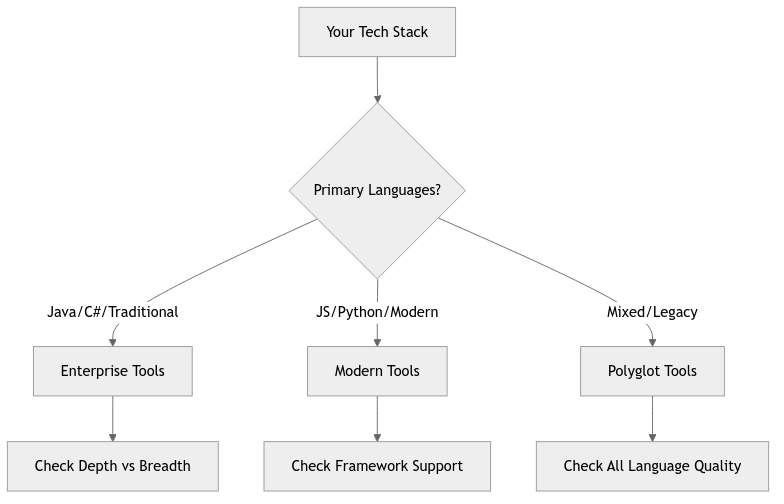

The Language Coverage Conundrum

First things first: does it speak your language? And I don’t mean English vs. French (though documentation matters too).

Key questions revolve around comprehensive support - does it handle ALL your languages, or will you need multiple tools? Consider the depth of support, distinguishing between basic syntax checking and semantic analysis. Framework understanding becomes crucial, whether you’re using React, Spring, Django, or others. And don’t forget about that weird proprietary language from 2003 that somehow still runs critical infrastructure.

Here’s a fun fact: even the worst SAST tools somehow manage to work decently with Java codebases. It’s like Java is the vanilla ice cream of programming languages - every SAST vendor makes sure it works because it’s what enterprise customers expect. Your cutting-edge Rust microservice? Your clever Kotlin coroutines? Good luck with that. But that 15-year-old Java monolith? Every tool has you covered!

Taint Analysis Capabilities: Following the Dirty Data

Not all taint analysis is created equal. Here’s what separates the wheat from the chaff.

Basic taint tracking follows direct variable assignments, performs single-file analysis, and traces simple source-to-sink paths. It’s functional but limited. Advanced taint analysis steps up the game with inter-procedural analysis that works across functions, cross-file tracking capabilities, field-sensitive analysis that tracks object properties, and context-sensitive understanding of calling contexts.
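The gap between basic and inter-procedural tracking shows up as soon as taint passes through a helper function. The Python sketch below runs a small forward analysis in two modes over a hypothetical snippet: in basic mode a call to a user-defined function is opaque and its result is assumed clean, while in inter-procedural mode a coarse function summary ("does the return value depend on a parameter?") carries taint through the call. The source and sink names are hypothetical, and real tools use far richer summaries.

```python
import ast

SOURCES = {"get_param"}      # hypothetical source
SINKS = {"execute_query"}    # hypothetical sink


def _names(node: ast.AST) -> set[str]:
    return {n.id for n in ast.walk(node) if isinstance(n, ast.Name)}


def _calls(node: ast.AST) -> set[str]:
    return {n.func.id for n in ast.walk(node)
            if isinstance(n, ast.Call) and isinstance(n.func, ast.Name)}


def _summary(func: ast.FunctionDef) -> bool:
    """Coarse summary: True if any return value uses one of the parameters."""
    params = {a.arg for a in func.args.args}
    return any(isinstance(n, ast.Return) and n.value is not None
               and bool(_names(n.value) & params)
               for n in ast.walk(func))


def analyze(code: str, interprocedural: bool) -> list[int]:
    """Return the line numbers of sink calls that receive tainted data."""
    tree = ast.parse(code)
    funcs = {f.name: f for f in tree.body if isinstance(f, ast.FunctionDef)}
    summaries = {name: _summary(f) for name, f in funcs.items()}
    tainted: set[str] = set()
    findings: list[int] = []
    for stmt in tree.body:
        if isinstance(stmt, ast.Assign) and isinstance(stmt.targets[0], ast.Name):
            value = stmt.value
            if _calls(value) & SOURCES:
                is_tainted = True
            elif (isinstance(value, ast.Call) and isinstance(value.func, ast.Name)
                  and value.func.id in funcs):
                # Basic mode: the helper is a black box, result assumed clean.
                # Inter-procedural mode: the summary carries taint through the call.
                arg_tainted = any(_names(a) & tainted for a in value.args)
                is_tainted = interprocedural and summaries[value.func.id] and arg_tainted
            else:
                is_tainted = bool(_names(value) & tainted)
            if is_tainted:
                tainted.add(stmt.targets[0].id)
            else:
                tainted.discard(stmt.targets[0].id)
        elif (isinstance(stmt, ast.Expr) and isinstance(stmt.value, ast.Call)
              and _calls(stmt.value) & SINKS):
            if any(_names(a) & tainted for a in stmt.value.args):
                findings.append(stmt.lineno)
    return findings


example = '''def build_query(uid):
    return "SELECT * FROM users WHERE id = " + uid

user_id = get_param("id")
query = build_query(user_id)
execute_query(query)
'''
print("basic:", analyze(example, interprocedural=False))
print("inter-procedural:", analyze(example, interprocedural=True))
```

The basic mode reports nothing, while the inter-procedural mode flags the sink on line 6: the same injection, hidden one function call deep.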

The elite-tier analysis tools handle the really complex stuff: reflection and dynamic code, tracking through databases and external storage, understanding framework-specific propagation patterns, and supporting custom taint propagation rules. This level of sophistication makes the difference between finding obvious vulnerabilities and catching the subtle ones that manual review might miss.

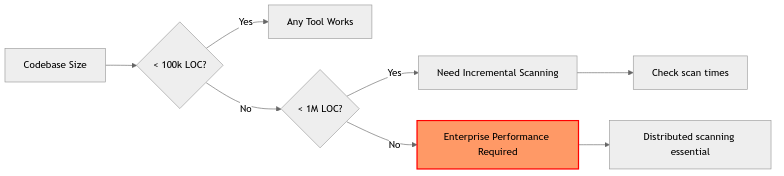

Performance at Scale: The Need for Speed

When you’re scanning millions of lines of code, performance matters.

Performance considerations scale with your codebase. You’ll need to evaluate full scan time on your largest repository and ensure the tool has incremental scan capabilities for daily use. Parallel processing support becomes crucial for large codebases, and you’ll need to compare cloud versus on-premise performance based on your infrastructure. Don’t forget about CI/CD timeout considerations - if your pipeline times out before SAST completes, you’ve got a problem.
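Incremental scanning usually starts with knowing what changed. A minimal sketch, assuming a hypothetical cache that maps relative paths to SHA-256 content digests from the previous run: only files whose digest differs are handed back for rescanning. A real tool also has to re-analyze files that depend on the changed ones (cross-file taint flows ignore file boundaries) and forget deleted files; this sketch does neither.

```python
import hashlib
from pathlib import Path


def files_to_rescan(root: Path, cache: dict[str, str],
                    suffixes: tuple[str, ...] = (".py", ".java", ".js")) -> list[str]:
    """Return files whose contents changed since the last run, updating `cache` in place."""
    changed = []
    for path in sorted(root.rglob("*")):
        if not path.is_file() or path.suffix not in suffixes:
            continue
        key = path.relative_to(root).as_posix()
        digest = hashlib.sha256(path.read_bytes()).hexdigest()
        if cache.get(key) != digest:  # new file or modified content
            changed.append(key)
            cache[key] = digest
    return changed
```

In a pipeline, the cache would be persisted between runs (as a JSON file, for instance) so the nightly full scan and the per-PR incremental scan share it.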

Integration Ecosystem: Playing Nice with Others

Your SAST tool needs to fit into your existing workflow like a puzzle piece, not a sledgehammer.

IDE integration provides real-time feedback while coding, quick fix suggestions, and needs minimal performance impact to avoid frustrating developers. CI/CD pipeline integration requires native plugins for your platform (Jenkins, GitLab, GitHub Actions), configurable quality gates, incremental PR scanning capabilities, and flexible failure threshold configuration.

For ticketing and tracking, you’ll want seamless integration with Jira, GitHub Issues, or Azure DevOps, along with deduplication across scans to avoid duplicate tickets. Trend tracking and metrics help demonstrate improvement over time, while SLA management ensures critical vulnerabilities get addressed promptly.
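Deduplication across scans usually relies on a fingerprint that survives unrelated edits. The sketch below is one hypothetical scheme, not any tracker’s: hash the rule ID, file path, and whitespace-normalized code snippet while deliberately leaving out the line number, so a finding that merely moved down the file does not open a second ticket. SARIF carries a similar idea in its partialFingerprints property.

```python
import hashlib


def fingerprint(rule_id: str, path: str, snippet: str) -> str:
    """Stable ID for a finding: ignores line numbers and whitespace changes."""
    normalized = " ".join(snippet.split())
    return hashlib.sha256(f"{rule_id}|{path}|{normalized}".encode()).hexdigest()[:16]


def new_findings(known: set[str], current: list[dict]) -> list[dict]:
    """Return only findings whose fingerprint has not been ticketed before."""
    return [f for f in current
            if fingerprint(f["rule"], f["path"], f["snippet"]) not in known]
```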

The Cost-Benefit Analysis Matrix

Let’s talk money and value:

| Factor | Open Source | Commercial | Enterprise |
|---|---|---|---|
| Initial Cost | $0 | $10-50k/year | $50k-500k/year |
| Hidden Costs | High (DIY setup) | Medium | Low |
| Support | Community | Vendor | Dedicated team |
| Customization | Full control | Limited | Extensive |
| Scaling | Manual | Some automation | Full automation |

Strategic Implementation Approaches

The Gradual Rollout 🐌

Start with a pilot on one team’s project for the first two weeks, then spend weeks 3-4 tuning rules and reducing false positives. Expand to similar projects in weeks 5-8, achieve department-wide rollout by week 12, and plan for organization-wide adoption from month 4 onwards. This approach minimizes disruption and allows learning from each phase.

The Big Bang 💥

Enable SAST for everything on day 1, spend days 2-30 drowning in findings, frantically tune rules from days 31-90, and by day 91 realize that gradual rollout would have been better. We don’t recommend this approach, but it’s surprisingly common.

The Hybrid Approach 🎯

Start with critical applications like payment processing and authentication systems. Use strict rules for new code but relaxed rules for legacy systems. Gradually increase coverage and strictness over time, applying different rules for different risk levels. This balances security needs with practical constraints.

Making the Decision: Your SAST Selection Checklist

```yaml
must_haves:
  - language_coverage:
      - covers_80_percent_of_codebase: true
      - framework_understanding: true
  - performance:
      - incremental_scanning: true
      - scan_time_under_ci_timeout: true
  - integration:
      - ci_cd_plugins: true
      - ide_support: true

nice_to_haves:
  - advanced_analysis:
      - cross_file_taint: true
      - custom_rules: true
  - reporting:
      - trend_analysis: true
      - compliance_mapping: true
  - support:
      - vendor_support: true
      - active_community: true

red_flags:
  - no_incremental_scanning: true
  - poor_documentation: true
  - vendor_lock_in: true
  - no_api_access: true
```

The Reality Check

No SAST tool is perfect. Here’s what to actually expect.

False positive rates of 40-75% are normal, regardless of vendor promises of “near-zero false positives.” Onboarding typically takes 3-6 months to properly tune the tool for your specific codebase and development patterns. Developer training requires budgeting 2-4 hours per developer for initial training and ongoing education. Maintenance is an ongoing commitment - plan for continuous rule tuning, updates, and adjustments as your codebase evolves.

Remember: the best SAST tool is the one your developers will actually use. A technically inferior tool with great UX and integration beats a powerful tool that everyone avoids.

Choose wisely, implement gradually, and always remember: SAST is a marathon, not a sprint. Unless your sprint is three months long, in which case you have bigger problems.

Glossary: Speaking SAST

- AST (Abstract Syntax Tree): Your code’s family tree, showing how all parts are related without the syntactic sugar.

- Control Flow Graph: A map of all possible paths through your code, like a choose-your-own-adventure book for programs.

- Data Flow Analysis: Following data through your application like a detective following a suspect.

- False Positive: When SAST cries wolf. The vulnerability equivalent of a fire alarm triggered by burnt toast.

- Sanitizer: Code that cleans dangerous input, like a bouncer checking IDs at the door.

- Sink: Where dangerous operations happen. The business end of a vulnerability.

- Source: Where untrusted data enters. The “here be dragons” entry points.

- Taint Analysis: Tracking “dirty” data through your application, like following muddy footprints.

- Taint Propagation: How contaminated data spreads through variables and functions, like a game of security hot potato.

- True Positive: An actual vulnerability. The “oh shit” moment that justifies your security budget.

Remember: SAST isn’t about replacing human intelligence; it’s about augmenting it. Let the robots handle the mundane pattern matching while you focus on the complex security decisions that require actual thought. And maybe, just maybe, you’ll get to finish that coffee while it’s still hot.

Happy hunting, and may your vulnerabilities be few and your false positives fewer!

Conclusion: The Human Touch in a Robot World

Let’s be crystal clear about something: a manual code audit is not just running a SAST tool and calling it a day. That’s like saying cooking is just putting ingredients in a microwave. Sure, both involve food and heat, but one creates culinary art while the other just reheats yesterday’s pizza.

Manual code review brings something SAST tools fundamentally cannot: context, creativity, and common sense. While SAST excels at finding that SQL injection on line 1,337, it completely misses that your authentication logic allows anyone named “admin” to bypass password checks. It won’t catch that your business logic allows users to transfer negative amounts of money (congratulations, you’ve invented a money printer!). And it definitely won’t notice that your “secure” random number generator always returns 4 because someone thought they were being funny.

The relationship between manual review and SAST is complementary, not competitive. Think of SAST as your reconnaissance drone - it flies over the entire codebase, quickly identifying obvious threats and mapping the terrain. Manual review is your special forces team that goes in to investigate the really interesting stuff. SAST finds the low-hanging fruit (and there’s usually plenty of it), freeing up human reviewers to focus on complex logic flaws, architectural issues, and those subtle bugs that require actual thinking.

But here’s the catch: SAST can be like that friend who thinks every headache is a brain tumor. The bigger your codebase, the more noise it generates. On a million-line enterprise application, you might get thousands of findings, and if your false positive rate is “only” 25%, you’re still looking at hundreds of wild goose chases. Each false positive takes 10-30 minutes to investigate, and suddenly your “time-saving” tool has generated weeks of work.
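The arithmetic is worth redoing with your own numbers. Assuming 2,000 findings (the low end of “thousands”), a 25% false positive rate, 10-30 minutes per investigation, and 40-hour work weeks (all assumptions), the sketch below lands at roughly two to six weeks of triage.

```python
def false_positive_cost_weeks(findings: int, fp_rate: float,
                              minutes_low: float = 10, minutes_high: float = 30,
                              hours_per_week: float = 40) -> tuple[float, float]:
    """Return (low, high) estimates of work weeks spent chasing false positives."""
    false_positives = findings * fp_rate
    hours_low = false_positives * minutes_low / 60
    hours_high = false_positives * minutes_high / 60
    return hours_low / hours_per_week, hours_high / hours_per_week


low, high = false_positive_cost_weeks(findings=2000, fp_rate=0.25)
print(f"{low:.1f} to {high:.1f} work weeks")
```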

The key is finding the right balance. Use SAST for what it’s good at: finding known vulnerability patterns at scale. Configure it properly to reduce noise by excluding test files, adding custom rules, and tuning sensitivity levels. Use manual review for what humans do best: understanding context, business logic, and architectural decisions. Combine both approaches iteratively - let SAST do the first pass, manually review the interesting findings, then dig deeper into areas SAST can’t reach.

Remember, security isn’t about choosing between human intelligence and artificial intelligence - it’s about combining them effectively. SAST tools are force multipliers, not force replacers. They’re the Robin to your Batman, the Watson to your Sherlock, the… well, you get the idea.

So embrace the robots, but don’t forget: at the end of the day, it takes a human to truly understand what makes code not just technically secure, but actually safe for the messy, unpredictable real world where users do things like entering emoji in phone number fields and trying to upload Excel files as profile pictures.

The future of code security isn’t human vs. machine - it’s human AND machine, working together to catch what the other misses. And maybe, just maybe, we’ll finally get to finish that coffee while it’s still hot.

References & Training Resources

Essential Reading

- OWASP Code Review Guide - The comprehensive guide to secure code review

- OWASP Source Code Analysis Tools - Extensive list of SAST tools

- Static Code Analysis | OWASP - Fundamentals of static analysis

Technical Deep Dives

- How to Find More Vulnerabilities - Source Code Auditing Explained - Practical vulnerability hunting techniques

- The Architecture of SAST Tools - GitHub’s technical breakdown

- Performing a Secure Code Review - Step-by-step methodology

- Analyse Statique - French resource on static analysis techniques

Hands-On Training Platforms

OWASP Juice Shop

- juice-shop.herokuapp.com

- The most modern vulnerable web application for security training

- Covers OWASP Top 10 and beyond

- Great for practicing both manual and automated testing

OWASP WebGoat

- owasp.org/www-project-webgoat

- Interactive lessons on web application security

- Step-by-step tutorials for each vulnerability type

- Perfect for beginners

Vulnerability.Codes

- vulnerability.codes

- Real code snippets with actual vulnerabilities

- Language-specific vulnerability examples

- Great for understanding how vulnerabilities look in different contexts

DeepReview.app

- deepreview.app/leaderboard

- Competitive code review challenges

- Real-world vulnerability scenarios

- Leaderboard to track your progress against other security researchers

Additional Resources

- Damn Vulnerable Web Application (DVWA) - Classic vulnerable app for testing

- Security Shepherd - OWASP’s security training platform

- HackTheBox - For when you want to test your skills in realistic environments

- PentesterLab - Exercises from basic to advanced vulnerability exploitation

Remember: The best way to learn is by doing. Start with Juice Shop or WebGoat, break things safely, and gradually work your way up to more complex challenges. And always practice on systems you have permission to test!